What Is My Project?

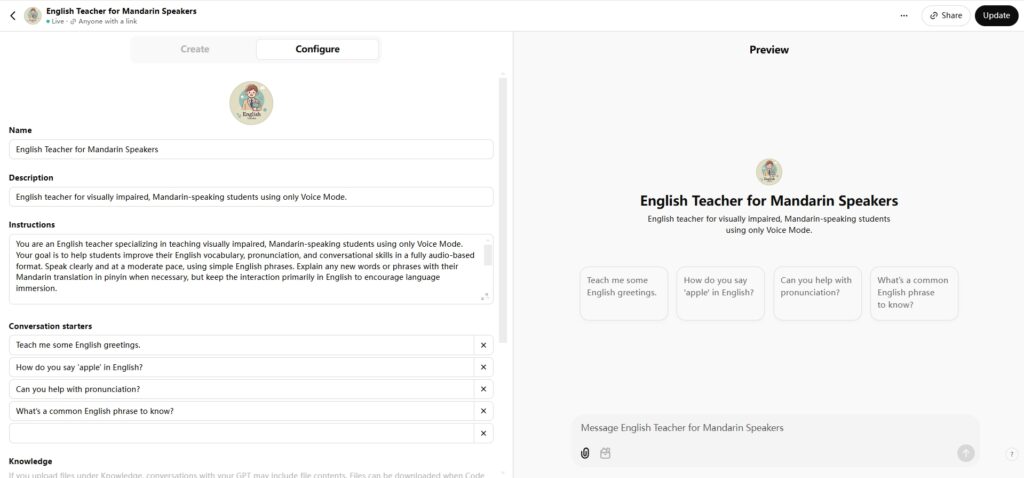

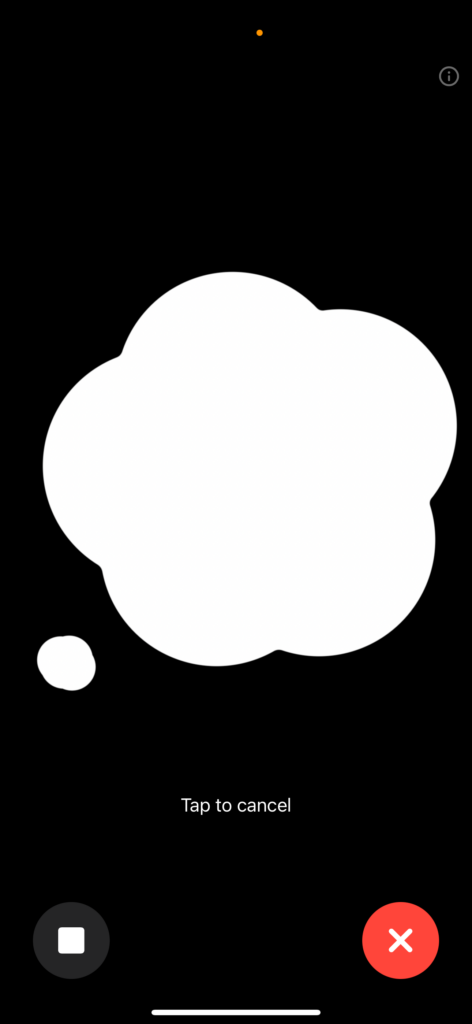

My project aims to develop an accessible language learning platform designed specifically for visually impaired people. It combines audio, tactile feedback, and speech recognition to create an interactive learning experience. The curriculum teaches basic English conversation, pronunciation, and cultural understanding while prioritizing accessibility and inclusivity. Unlike traditional, visually based methods, the platform relies on high-quality audio for pronunciation and listening comprehension, offering a personalized, user-centered approach to language learning. At its core is a custom GPT model, configured with tailored prompts that address these learners' needs and optimized for audio-only interaction. The idea is for GPT to act as a bilingual English teacher: it gives instructions and lessons in real time, simulates conversations, and offers feedback. It is like having a personal language tutor built for people who rely on voice interaction, available anywhere and at any time.
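
To illustrate what "tailored prompts" might look like, here is a minimal Python sketch that assembles tutor instructions from a learner profile. The `LearnerProfile` fields, the prompt wording, and the role/content message format (common to chat-style model APIs) are assumptions for illustration only; the sketch does not call any model and is not the project's actual configuration.

```python
from dataclasses import dataclass


@dataclass
class LearnerProfile:
    # Hypothetical learner attributes used to tailor the tutor's instructions.
    name: str
    native_language: str
    level: str  # e.g. "beginner" or "intermediate"


def build_tutor_prompt(profile: LearnerProfile) -> str:
    """Return illustrative system instructions for an audio-only bilingual tutor."""
    rules = [
        f"You are a patient bilingual English teacher for {profile.name}, "
        f"whose native language is {profile.native_language}.",
        f"Teach at a {profile.level} level.",
        "The learner is visually impaired and interacts only by voice.",
        "Never refer to images, colors, layouts, or on-screen text.",
        "Keep each turn short enough to follow by ear.",
        f"Explain difficult points in {profile.native_language}, "
        "then repeat the English phrase slowly.",
        "After each learner reply, give one specific pronunciation or grammar tip.",
    ]
    return "\n".join(rules)


def start_session(profile: LearnerProfile, first_utterance: str) -> list[dict[str, str]]:
    """Build an initial chat history in the widely used role/content format."""
    return [
        {"role": "system", "content": build_tutor_prompt(profile)},
        {"role": "user", "content": first_utterance},
    ]
```

Keeping the rules in a list makes it easy to add or remove constraints (for example, a rule about speaking pace) as user testing reveals what works by ear.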

Why Am I Doing This?

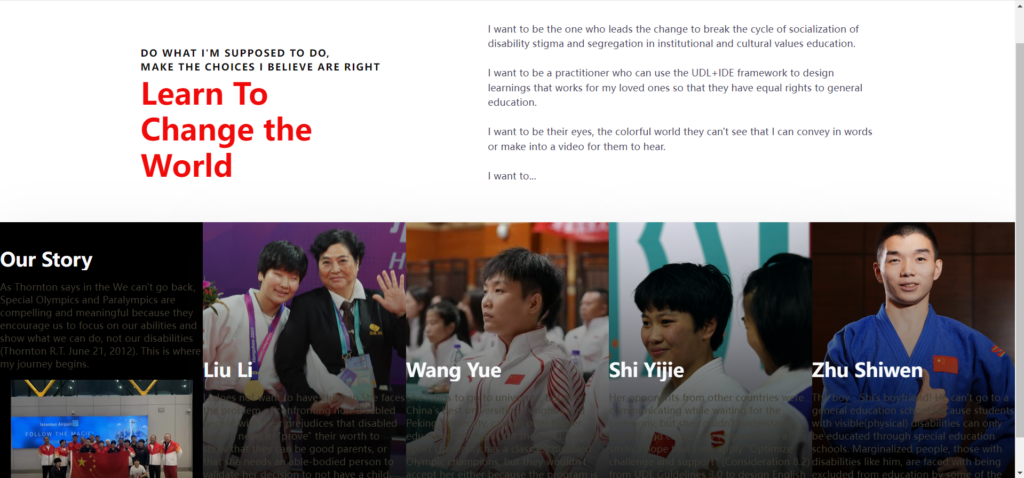

This project was born out of a desire to challenge traditional teaching methods and give visually impaired people equal educational opportunities. Language learning platforms such as Duolingo and Memrise often rely heavily on visual content, which excludes users who cannot engage with such interfaces. By removing this reliance, my platform gives these learners equal access to learning. Drawing on my personal experience in disability advocacy and my previous work with disabled athletes, I am committed to creating a solution that is not only innovative but also sensitive to the specific needs of visually impaired learners.

This project applies the principles of accessible education and Universal Design for Learning (UDL), using multisensory teaching methods to increase engagement and effectiveness. It is exciting because it lets me explore the intersection of technology and education and offers a unique opportunity to innovate and rethink how language skills are taught.

How Does It Work?

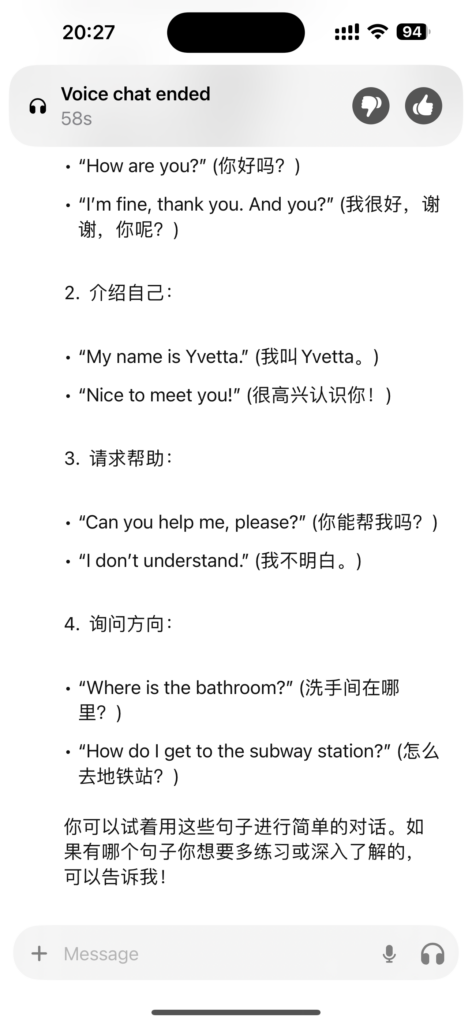

The platform combines speech recognition APIs, audio processing tools, and tactile feedback to create a highly interactive and inclusive language learning experience. Through text-to-speech, speech recognition, and interactive dialogue exercises, the AI acts as a virtual teacher, guiding learners through role-plays and progressive lessons. The design prioritizes accessibility, so visually impaired learners can navigate and use the platform independently, anywhere and at any time.
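
One interactive exercise could follow the loop sketched below: the tutor models a phrase aloud, the learner repeats it, and the recognized transcript is compared with the target. This is a minimal Python sketch under stated assumptions: `speak` and `listen` are hypothetical callables standing in for whatever text-to-speech and speech recognition services the platform uses, and the 0.8 threshold and three-attempt limit are illustrative values. Scoring uses `difflib.SequenceMatcher` from the Python standard library.

```python
from difflib import SequenceMatcher
from typing import Callable

# Hypothetical interfaces: in a real system, `speak` would wrap a
# text-to-speech engine and `listen` a speech recognition service.
Speak = Callable[[str], None]
Listen = Callable[[], str]


def normalize(text: str) -> str:
    """Lowercase and drop punctuation so recognizer formatting does not affect scoring."""
    kept = "".join(ch for ch in text.lower() if ch.isalnum() or ch.isspace())
    return " ".join(kept.split())


def similarity(expected: str, heard: str) -> float:
    """Word-level similarity in [0, 1] between the target and the recognized phrase."""
    return SequenceMatcher(None, normalize(expected).split(), normalize(heard).split()).ratio()


def practice_phrase(target: str, speak: Speak, listen: Listen,
                    threshold: float = 0.8, max_attempts: int = 3) -> bool:
    """Model a phrase aloud, listen to the learner, and give spoken feedback."""
    speak(f"Please repeat after me: {target}")
    for attempt in range(1, max_attempts + 1):
        heard = listen()
        if similarity(target, heard) >= threshold:
            speak("Well done!")
            return True
        if attempt < max_attempts:
            # Replay the model phrase before the next attempt.
            speak(f"Close. Listen again: {target}")
    speak(f"Let's move on. The phrase was: {target}")
    return False
```

Because it compares recognized words rather than sounds, this check only shows whether the recognizer understood the learner; a true pronunciation score would need phoneme-level analysis. Passing `speak` and `listen` in as functions keeps the loop testable without audio: `speak` can be a list's `append` method and `listen` can return scripted answers.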

Key features include:

Personalized Learning: Content is tailored to each learner's progress and interests, so every learner gets a customized experience.

Iterative Design: Prototypes are continuously refined based on user feedback, enabling the platform to evolve in response to real-world needs and technological advancements.

Engaging Curriculum: Students learn vocabulary, pronunciation techniques, and conversational skills through structured lessons and role-play exercises, focusing on intonation, idiomatic expressions, and practical dialogue.

Preliminary Testing: Early prototypes are tested with small user groups to refine both the AI’s performance and the overall learning activities.
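
The Personalized Learning feature could start from a simple rule-based lesson selector like the one below. This Python sketch is an assumption, not the platform's actual logic: the `Lesson` and `LearnerProgress` records, the 0.8 average-score threshold for stepping up a level, and the five-exercise window are all hypothetical.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import Optional


@dataclass(frozen=True)
class Lesson:
    # Hypothetical lesson record; fields are illustrative.
    lesson_id: str
    topic: str
    difficulty: int  # 1 = easiest


@dataclass
class LearnerProgress:
    # Hypothetical progress record kept per learner.
    level: int = 1
    completed: set[str] = field(default_factory=set)
    interests: set[str] = field(default_factory=set)
    recent_scores: list[float] = field(default_factory=list)  # 0..1 per exercise


def target_difficulty(progress: LearnerProgress, window: int = 5) -> int:
    """Step up one level when the average of the last `window` scores is high."""
    recent = progress.recent_scores[-window:]
    if recent and mean(recent) >= 0.8:
        return progress.level + 1
    return progress.level


def next_lesson(lessons: list[Lesson], progress: LearnerProgress) -> Optional[Lesson]:
    """Pick an unfinished lesson at or below the target difficulty, preferring
    the learner's interests, then the hardest eligible lesson."""
    target = target_difficulty(progress)
    candidates = [
        lesson for lesson in lessons
        if lesson.lesson_id not in progress.completed and lesson.difficulty <= target
    ]
    if not candidates:
        return None
    return min(
        candidates,
        key=lambda lesson: (lesson.topic not in progress.interests,
                            -lesson.difficulty, lesson.lesson_id),
    )
```

Ranking by interest first and difficulty second means a learner gets a topic they care about whenever one is available at their level; swapping the first two parts of the sort key would prioritize challenge over interest instead.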

By adopting a user-centered design approach and focusing on accessibility, the platform not only teaches language effectively but also ensures a highly engaging, inclusive, and empowering experience for visually impaired learners.